Static hosting with AWS and CloudFlare

NOTE: This isn't really meant to be a guide or a tutorial. Just an account of everything I did to make this work and everything I learned along the way. I'm sure I'll want to set up more static sites in the future, so I'm writing down my process now before I forget.

I used to host all my static websites with Dreamhost.com. It was so easy.

- Register a domain

- Click two buttons to add a free cert

- Open up the web file browser

- Drop in my files

- It works

- I could also click two buttons and install WordPress, but I'm past those days now

Dreamhost was great, but it was expensive. I think I was paying around $120 a year for a hosting plan. I can't find that plan on their pricing page anymore; it looks like a single site is now $7 a month. I still care about two static sites I own (this one and another one). If I wanted to use Dreamhost, that would be $168 a year. Not for me. I wanted something cheaper.

I decided to switch to AWS for hosting and use CloudFlare for protection. Now my costs are 50 cents a month per site, totaling just $12 a year.
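Here is the cost math from the last two paragraphs as a tiny sketch. The per-site monthly prices are the figures quoted in this post: $7 for a Dreamhost single-site plan and about 50 cents on AWS. They are not live pricing, and `annualCost` is just a made-up helper name.

```javascript
// Annual hosting cost for a number of sites billed per site, per month.
// Prices are the figures quoted in this post, not current list prices.
function annualCost(sites, monthlyPerSite) {
  return sites * monthlyPerSite * 12;
}

const dreamhost = annualCost(2, 7); // two sites on $7/month plans
const aws = annualCost(2, 0.5);     // two sites at ~$0.50/month each
console.log(`Dreamhost: $${dreamhost}/yr, AWS + CloudFlare: $${aws}/yr`);
```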

I am sure there are countless other static hosting sites that could do it cheaper or easier than AWS + CloudFlare. Like CloudFlare Pages (https://pages.cloudflare.com/), Netlify (https://www.netlify.com/), or GitHub Pages (https://pages.github.com/). Whatever. I don't care. I like learning how to use AWS, and I like that I can start doing more complicated things in the future if I want. I had also never used CloudFlare before, so this was a good excuse. I use AWS for work too, so this is another good excuse to learn more.

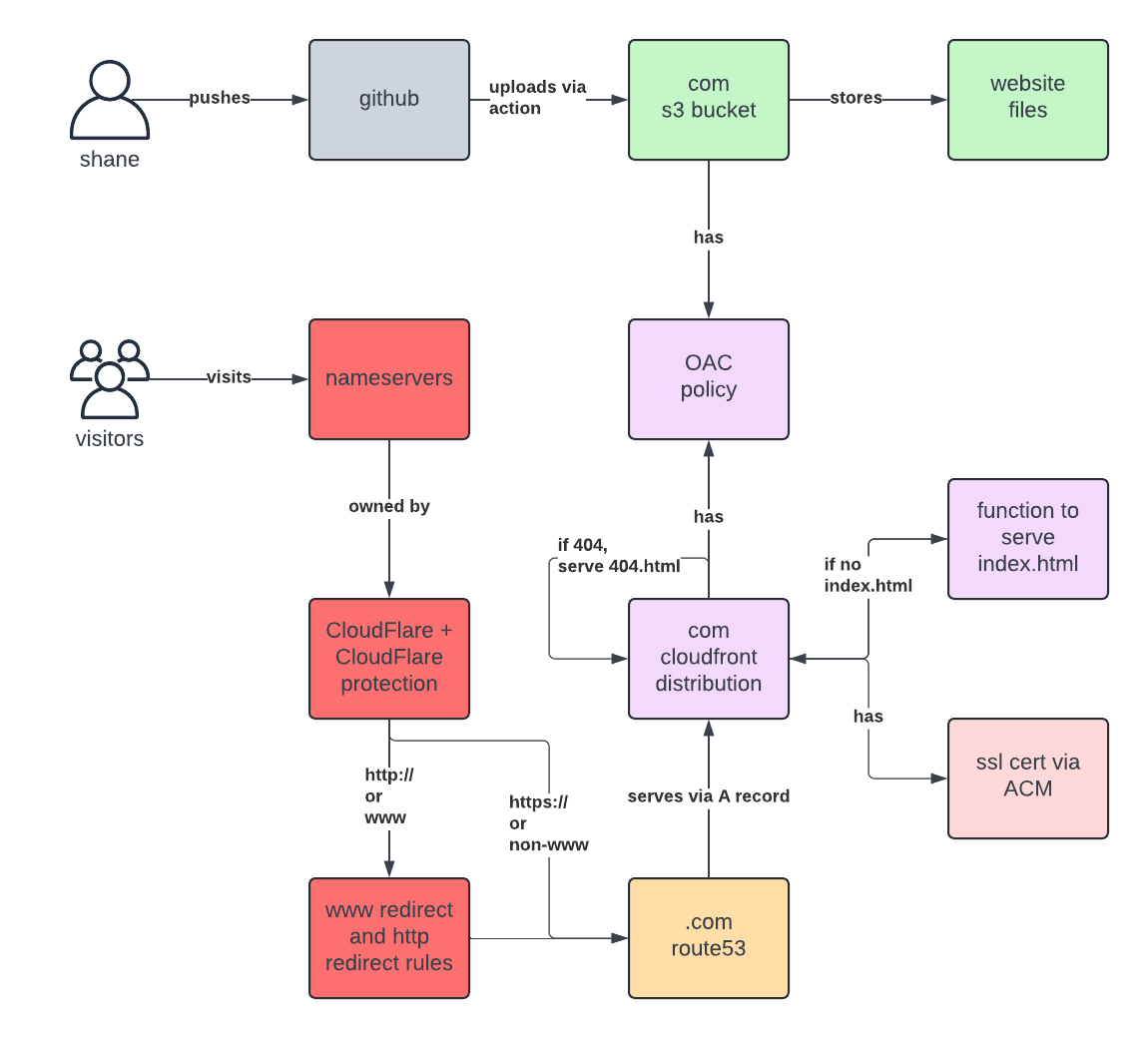

Here is everything I did to set up shanebodimer.com resulting in this architecture:

Requirements

Moving off Dreamhost, I lost a lot of features. I wanted to keep these core things:

- simple upload process

- a static website

- SSL cert

- protection against spam/DDoS/scripts

- redirects

  - www to non-www

  - http to https

- 404 handling

- remove .html from pages (I don't want /static_hosting_with_AWS_and_cloudflare/index.html, I want a clean /static_hosting_with_AWS_and_cloudflare)

My domain was already registered in Route 53 on AWS (rip Google Domains) so I didn't have to worry about that.

A simple upload process

This is straightforward enough. AWS S3 is the obvious place to upload files. I created a bucket named shanebodimer.com.

Dragging and dropping files into the bucket is easy, but I wanted something even easier. Doing 2FA to get into AWS every time I want to upload is annoying; it needed to be easier :)

To accomplish this, I used GitHub Actions. I copied this action template from here: https://medium.com/@olayinkasamuel44/how-to-deploy-a-static-website-to-s3-bucket-using-github-actions-ci-script-fa1acc932fbd

```yaml
name: deploy static website to AWS-S3

on:
  push:

jobs:
  deploy:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout repository
        uses: actions/checkout@v4

      - name: Set up AWS CLI
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-1

      - name: Deploy to AWS S3
        # --exclude keeps the repo's .git and .github folders out of the public bucket
        run: |
          aws s3 sync . s3://shanebodimer.com --delete --exclude ".git/*" --exclude ".github/*"
```

Pretty simple. I push to my repo, and this action syncs it to S3. It uploads new and changed files, and --delete removes anything from the bucket that I deleted locally. As soon as I finish writing this, I am going to git push, and it will be live. Too easy.
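Here is roughly what `aws s3 sync . s3://shanebodimer.com --delete` decides on each push, as a simplified model. The real CLI compares both file size and last-modified time. This sketch only compares sizes, and `planSync` and the sample file listings are made up for illustration.

```javascript
// Simplified model of `aws s3 sync . s3://bucket --delete`.
// Maps go from object key (path) to size in bytes. The real CLI also compares
// last-modified times; this sketch only compares sizes.
function planSync(local, remote, deleteExtra = true) {
  const upload = [];
  const remove = [];
  for (const [key, size] of local) {
    // New file (remote.get returns undefined) or changed size: upload it.
    if (remote.get(key) !== size) upload.push(key);
  }
  if (deleteExtra) {
    for (const key of remote.keys()) {
      // --delete: anything in the bucket that no longer exists locally goes away.
      if (!local.has(key)) remove.push(key);
    }
  }
  return { upload, remove };
}

// Hypothetical file listings for illustration.
const local = new Map([["index.html", 2048], ["about/index.html", 512], ["404.html", 300]]);
const remote = new Map([["index.html", 2048], ["about/index.html", 480], ["old-post/index.html", 900]]);
const plan = planSync(local, remote);
console.log("upload:", plan.upload, "delete:", plan.remove);
// upload: about/index.html (changed) and 404.html (new); delete: old-post/index.html
```

Without --delete (`deleteExtra = false`), old pages would just pile up in the bucket forever.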

I don't think I ran into a single error setting this up.

A static website

Ok now that I have the files on S3, I need to serve them!

My first tutorial was the classic Static Website with S3 (https://docs.aws.amazon.com/AmazonS3/latest/userguide/WebsiteHosting.html), but this only gives you an ugly S3 URL to access the site, and the bucket has to be public. This is bad, and I'll explain why later.

My second tutorial was Static Website with Custom Domain (https://docs.aws.amazon.com/AmazonS3/latest/userguide/website-hosting-custom-domain-walkthrough.html) but that required me to make two buckets (why??), there was no SSL cert, and it still required the buckets to be set to public.

Finally, I found a tutorial to serve a static site with SSL using CloudFront: https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/getting-started-secure-static-website-cloudformation-template.htm

It used CloudFormation to deploy, which I wanted to avoid for these reasons:

- I didn't want all the extra logging

- I wanted to fully understand all the resources I was creating

- I wanted to fully understand the costs of everything I was creating

- I wanted a challenge in the future to create a CloudFormation stack from scratch

- I think blindly cloning CloudFormation templates without 100% understanding how a system should work is bad practice. So much gets abstracted. CloudFormation is great at scale and obvious for doing something like this, but it forces devs to skip over learning the basics. You must do it manually first. Using CloudFormation to spin up resources you haven't fully understood yet is like using a calculator to do calculus before learning addition. Maybe I am being dramatic.

I created a CloudFront distribution with these basic settings:

- CNAME: shanebodimer.com

- Created a custom cert for shanebodimer.com

- Picked the recommended policy

- Set default root object to index.html

- Logging off, IPv6 on

For the Origin (where CloudFront gets the files to serve), I created a new S3 Origin and picked my shanebodimer.com S3 bucket.

For origin access, I created a new Origin access control (OAC) called shanebodimer.

OAC is so important because it lets only my CloudFront distribution talk to my S3 bucket. If my S3 bucket were set to public like the other tutorials suggest, anyone could hit the S3 bucket endpoint directly and start downloading files. This could skyrocket my AWS bill. OAC forces every request for my files to go through CloudFront.

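OAC created this policy for me with s3:GetObject. I added s3:ListBucket to help with 404s. When you requested a route, sometimes it was 404ing. ListBucket fixes that, I think. Now CloudFront is able to actually see what is in a folder rather than trying to serve the folder as an object. At least I think that's how it's working. I think this is a result of this behavior: https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/DefaultRootObject.html#DefaultRootObjectNotSet.

For what it's worth, the S3 docs describe one concrete thing ListBucket changes. Without it, S3 answers a request for a key that doesn't exist with 403 Access Denied instead of 404 Not Found, so it doesn't leak which keys exist. With it, missing pages come back as real 404s, which is what CloudFront's 404 error page matches on. I could probably remove it now that I have additional routing rules, but that 403-vs-404 behavior is a good reason to keep it.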

```json
{
  "Version": "2008-10-17",
  "Id": "PolicyForCloudFrontPrivateContent",
  "Statement": [
    {
      "Sid": "ServeFiles",
      "Effect": "Allow",
      "Principal": {
        "Service": "cloudfront.amazonaws.com"
      },
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::shanebodimer.com/*",
      "Condition": {
        "StringEquals": {
          "AWS:SourceArn": "arn:aws:cloudfront::{my aws account number}:distribution/{my cloudfront distribution}"
        }
      }
    },
    {
      "Sid": "ListBucketToKnow404",
      "Effect": "Allow",
      "Principal": {
        "Service": "cloudfront.amazonaws.com"
      },
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::shanebodimer.com",
      "Condition": {
        "StringEquals": {
          "AWS:SourceArn": "arn:aws:cloudfront::{my aws account number}:distribution/{my cloudfront distribution}"
        }
      }
    }
  ]
}
```
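If I ever turn this into a template, the only parts of that policy that change between sites are the bucket name, account ID, and distribution ID. Here is a minimal sketch that builds the same two statements. `oacBucketPolicy` and the example IDs below are placeholders, not real values.

```javascript
// Build the OAC bucket policy for any bucket/distribution pair.
// Statement 1 lets CloudFront read objects; statement 2 lets it list the bucket,
// so S3 reports missing keys as 404 instead of 403.
function oacBucketPolicy(bucket, accountId, distributionId) {
  const condition = {
    StringEquals: {
      "AWS:SourceArn": `arn:aws:cloudfront::${accountId}:distribution/${distributionId}`,
    },
  };
  const principal = { Service: "cloudfront.amazonaws.com" };
  return {
    Version: "2008-10-17",
    Id: "PolicyForCloudFrontPrivateContent",
    Statement: [
      {
        Sid: "ServeFiles",
        Effect: "Allow",
        Principal: principal,
        Action: "s3:GetObject",
        Resource: `arn:aws:s3:::${bucket}/*`, // the objects inside the bucket
        Condition: condition,
      },
      {
        Sid: "ListBucketToKnow404",
        Effect: "Allow",
        Principal: principal,
        Action: "s3:ListBucket",
        Resource: `arn:aws:s3:::${bucket}`, // the bucket itself, no /*
        Condition: condition,
      },
    ],
  };
}

// Hypothetical account and distribution IDs, for illustration only.
const policy = oacBucketPolicy("shanebodimer.com", "111122223333", "EDFDVBD6EXAMPLE");
console.log(JSON.stringify(policy, null, 2));
```

GetObject is granted on `bucket/*` (the objects) while ListBucket is granted on the bucket ARN itself, which is why the two Resource strings differ.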

Cool. Now my CloudFront is the only thing that can access my S3 bucket and I have a cert.

I don't remember what I did to connect my domain name. I think AWS just walked me through selecting the domain for CloudFront and it automatically added the A record to point to CloudFront.

Protecting (with CloudFlare)

CloudFront has variable pricing, meaning more traffic means higher costs. If I were to run a bad script, or someone else ran one, my CloudFront distribution could get hammered with millions and millions of requests, and I could get charged A LOT. I don't want this. I need some sort of protection to filter out spam traffic.

AWS offers protection for CloudFront called WAF (Web Application Firewall): https://docs.aws.amazon.com/waf/latest/developerguide/cloudfront-features.html. It does exactly what I want:

Keep your application secure from the most common web threats and security vulnerabilities using AWS WAF. Blocked requests are stopped before they reach your web servers.

BUT it costs like $14 a month for 10,000,000 requests, and there are additional monthly costs for having it enabled ($5 a month to have it, $1 a month per rule, etc.).
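Here is a rough way to estimate the WAF bill, as a sketch. The $5 per web ACL and $1 per rule come from the numbers above. The $0.60 per million requests default is my assumption of AWS's list price, so double-check the WAF pricing page before relying on it. `wafMonthlyCost` is a made-up helper name.

```javascript
// Rough AWS WAF monthly cost. $5/web ACL and $1/rule are from this post;
// perMillionRequests = 0.6 is an ASSUMED list price, verify on the pricing page.
function wafMonthlyCost({ webAcls = 1, rules = 0, requests = 0, perMillionRequests = 0.6 } = {}) {
  const fixed = webAcls * 5 + rules * 1;                       // charged even with zero traffic
  const usage = (requests / 1_000_000) * perMillionRequests;   // scales with traffic
  return fixed + usage;
}

// One web ACL, three rules, ten million requests.
console.log(wafMonthlyCost({ webAcls: 1, rules: 3, requests: 10_000_000 }).toFixed(2));
```

With one web ACL, three rules, and ten million requests, that comes out to $14.00 a month. For a site that gets a few hits a month, the fixed part is basically the whole bill.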

Also, I think Shield Advanced is better suited for DDoS protection, but that's $3,000 a month with a one-year commitment: https://aws.amazon.com/shield/ (Shield Standard comes free with CloudFront, but it's only the basic tier.)

CloudFlare can do all this for free!

I made an account with CloudFlare, added my domain, and changed the nameservers in Route 53 to point to CloudFlare. Within about 5 minutes, everything showed as connected.

Now, all requests to my site go through CloudFlare first which has security enabled. I can pick any of these levels that will automatically detect and block bad requests: https://developers.cloudflare.com/waf/tools/security-level/#security-levels

I could also talk about how great it is that everything is cached around the world by CloudFlare and CloudFront, but I don't care about that. I get like 3 hits a month on my website, and they are probably bots lol. I don't care to talk about caching.

Routing rules

I want ALL of these routes to go to https://shanebodimer.com:

- shanebodimer.com

- www.shanebodimer.com

- http://shanebodimer.com

- http://www.shanebodimer.com

I want any missing page to show a 404 page.

I want pages not to have index.html in the URL (for example, this document is an index.html, but you don't see it in the URL).

www to non-www

CloudFlare can handle the www to non-www redirect with rules; they even have a pre-made template for it: https://developers.cloudflare.com/rules/. It is free.

http to https

CloudFlare can also handle this with rules. I get 10 free rules for each domain, which is plenty.

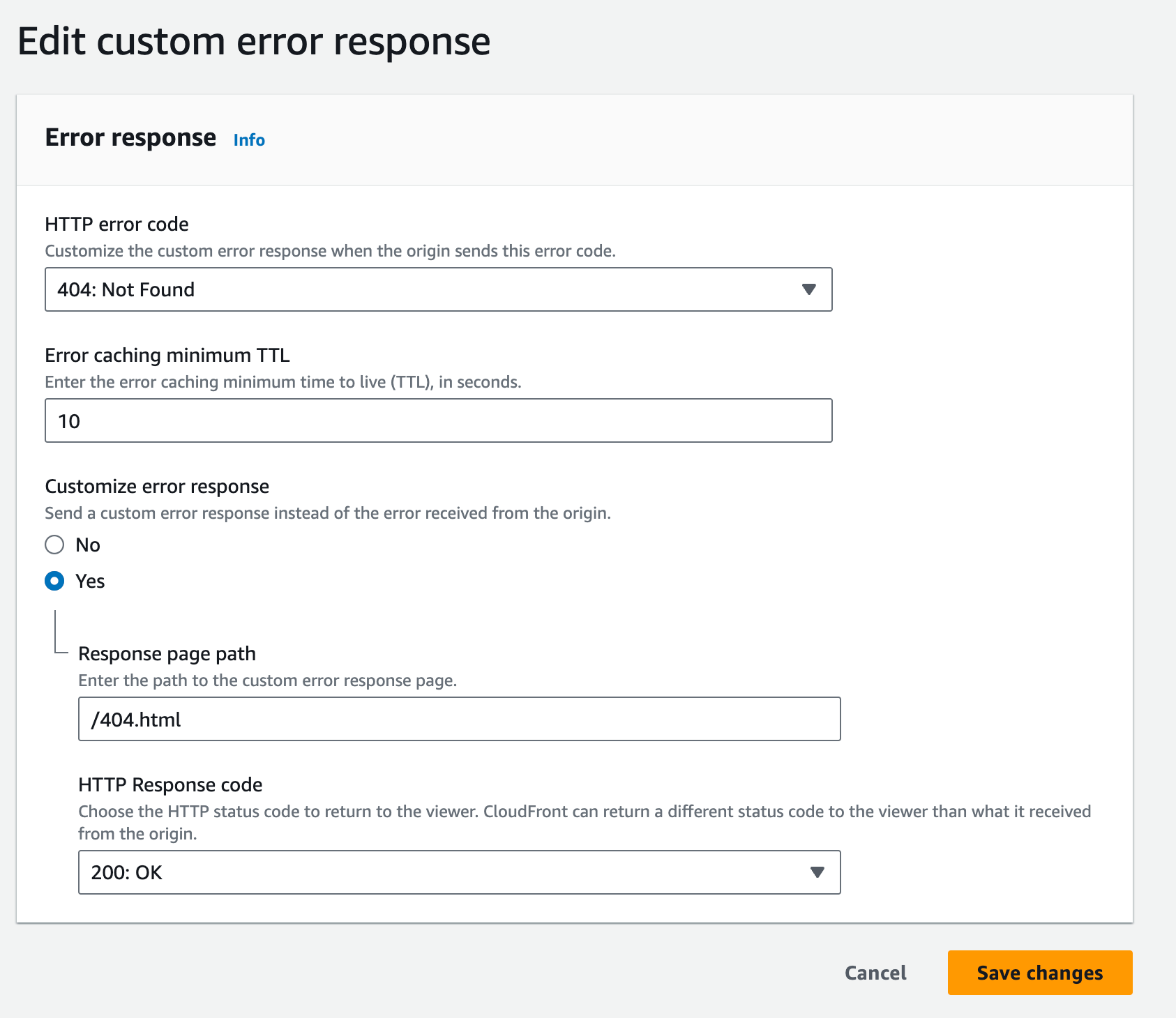
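The two CloudFlare rules together boil down to one idea: whatever variant comes in, send the visitor to https://shanebodimer.com with the same path. Here is that logic as a plain function, which is handy for reasoning about (or testing) the expected redirect targets. It only models the outcome. It is not CloudFlare rule syntax, and `canonicalUrl` is a made-up name.

```javascript
// Model of the www -> non-www and http -> https redirects.
// Returns the canonical URL for one of my hostnames, or null for any other host.
const CANONICAL_HOST = "shanebodimer.com";

function canonicalUrl(input) {
  // Assume http:// when no scheme is given (e.g. "www.shanebodimer.com").
  const raw = /^https?:\/\//i.test(input) ? input : `http://${input}`;
  const url = new URL(raw);
  const host = url.hostname.replace(/^www\./, "");
  if (host !== CANONICAL_HOST) return null; // not one of my hostnames
  // Keep the path and query string; only the scheme and host change.
  return `https://${CANONICAL_HOST}${url.pathname}${url.search}`;
}

for (const variant of ["shanebodimer.com", "www.shanebodimer.com",
  "http://shanebodimer.com", "http://www.shanebodimer.com"]) {
  console.log(variant, "->", canonicalUrl(variant));
}
```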

404

CloudFront handles this with "Error pages". I can just say: on any 404, return the 404.html page. This is free.
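For reference, the "Error pages" setting maps onto the CustomErrorResponses part of a CloudFront distribution config. Here is a sketch of what that piece might look like as a JS object, for example for an SDK call or template later. The field names follow the CloudFront API's CustomErrorResponse type as I understand it. Keeping the 404 status, using /404.html, and the 10-second error cache TTL are my assumptions, not settings from this post.

```javascript
// Sketch of the "Error pages" setting as the CustomErrorResponses block of a
// CloudFront DistributionConfig (API field names; values are assumptions).
function customErrorResponses(pagePath = "/404.html") {
  const items = [
    {
      ErrorCode: 404,             // status the origin (S3) returned
      ResponsePagePath: pagePath, // object CloudFront serves instead
      ResponseCode: "404",        // status the visitor sees (a string in the API)
      ErrorCachingMinTTL: 10,     // seconds CloudFront caches the error response
    },
  ];
  return { Quantity: items.length, Items: items };
}

console.log(JSON.stringify(customErrorResponses(), null, 2));
```

If the bucket policy didn't grant s3:ListBucket, S3 would answer missing keys with 403, so you'd need a second item with ErrorCode 403 for this page to show up.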

index.html

CloudFront handles this with "Functions". The function checks whether the URL is missing a file name (it ends in /) or a file extension, and if so, rewrites the request so CloudFront serves that folder's index.html. I stole this code from Stack Overflow: https://stackoverflow.com/a/69157535

```javascript
function handler(event) {
    var request = event.request;
    var uri = request.uri;

    // Check whether the URI is missing a file name.
    if (uri.endsWith('/')) {
        request.uri += 'index.html';
    }
    // Check whether the URI is missing a file extension.
    else if (!uri.includes('.')) {
        request.uri += '/index.html';
    }

    return request;
}
```
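Because it's just a function that takes an event and returns a request, I can sanity-check it locally before publishing. Here is a minimal Node sketch. The `handler` is the same code as above. The fake event only fills in the `request.uri` field the function reads; a real CloudFront event has much more. `rewrite` is a made-up helper.

```javascript
// Same rewrite logic as the CloudFront Function above.
function handler(event) {
  var request = event.request;
  var uri = request.uri;
  if (uri.endsWith('/')) {
    request.uri += 'index.html';      // folder with trailing slash
  } else if (!uri.includes('.')) {
    request.uri += '/index.html';     // no extension anywhere in the URI
  }
  return request;
}

// Minimal fake viewer-request event: only request.uri is populated.
function rewrite(uri) {
  return handler({ request: { uri: uri } }).uri;
}

const cases = ['/', '/static_hosting_with_AWS_and_cloudflare',
  '/static_hosting_with_AWS_and_cloudflare/', '/image-1.png'];
for (const uri of cases) {
  console.log(uri, '->', rewrite(uri));
}
```

One quirk this makes obvious: the extension check looks at the whole URI. A hypothetical folder like /v1.2/ works with its trailing slash, but /v1.2 without one is left unchanged and would 404.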

I thought the "default root object" setting in CloudFront would handle this, but apparently not: it only applies to requests for the root URL (/), not to subfolders. I need this function for my subpages to work. Oh well. Pricing is cheap enough that I'm not worried:

Invocation pricing is $0.10 per 1 million invocations ($0.0000001 per invocation).

It works!!!!!!

That is all: everything I did to safely host my static sites as cheaply as possible. I'm sure there are better ways, but this was fun.

Next time I get bored on a weekend I'll turn this into a CloudFormation template so spinning up sites in the future will be faster.

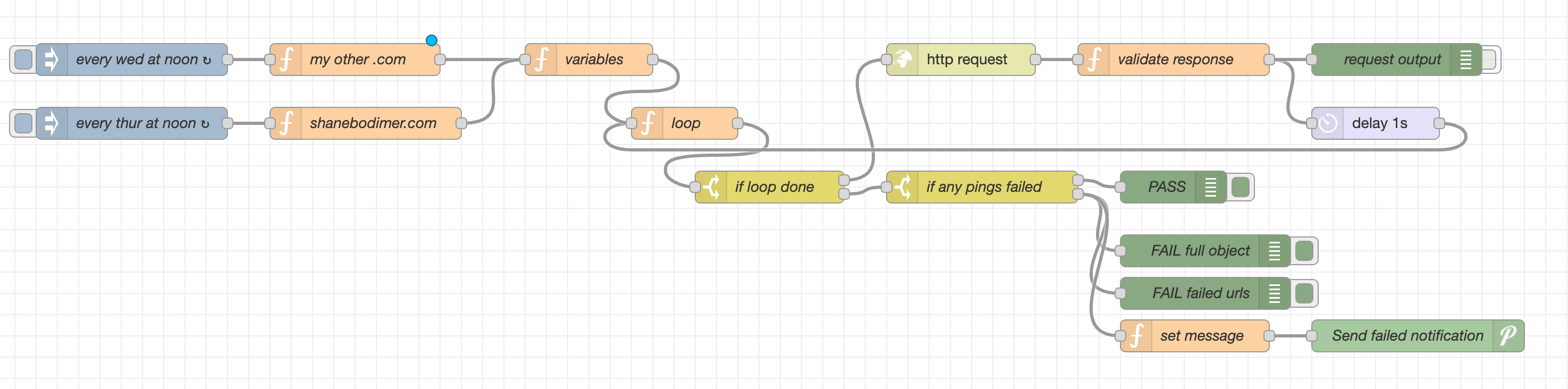

Bonus: Canaries

At work we talk about canaries a lot: a script or service somewhere that occasionally checks on a service to let us know if it is operating correctly. This is good for services that don't have the best monitoring.

Since I don't have servers or Lambda functions to monitor when something is "broken", I have to rely on canaries to tell me if my sites are down.

I could do this with AWS or other cool websites like https://pingping.io/, but I've been really into Home Assistant lately and wanted an excuse to build on my home network.

I used the Node-RED Home Assistant addon and created a new page to run canaries. This is what it looks like:

Basically once a week, it will ping a handful of my URLs and look for certain text in the response. If that text is missing, my page is not serving correctly and I get a notification on my phone to investigate.

This is how I structured my tests:

```javascript
const validateString = "Nova"
const string404 = "404 bro :("

const urls = [
  { url: 'shanebodimer.com', find: validateString },
  { url: 'https://shanebodimer.com', find: validateString },
  { url: 'http://shanebodimer.com', find: validateString },
  { url: 'www.shanebodimer.com', find: validateString },
  { url: 'https://www.shanebodimer.com', find: validateString },
  { url: 'http://www.shanebodimer.com', find: validateString },
  { url: 'http://www.shanebodimer.com/should404', find: string404 }
]

msg.urls = urls
return msg;
```

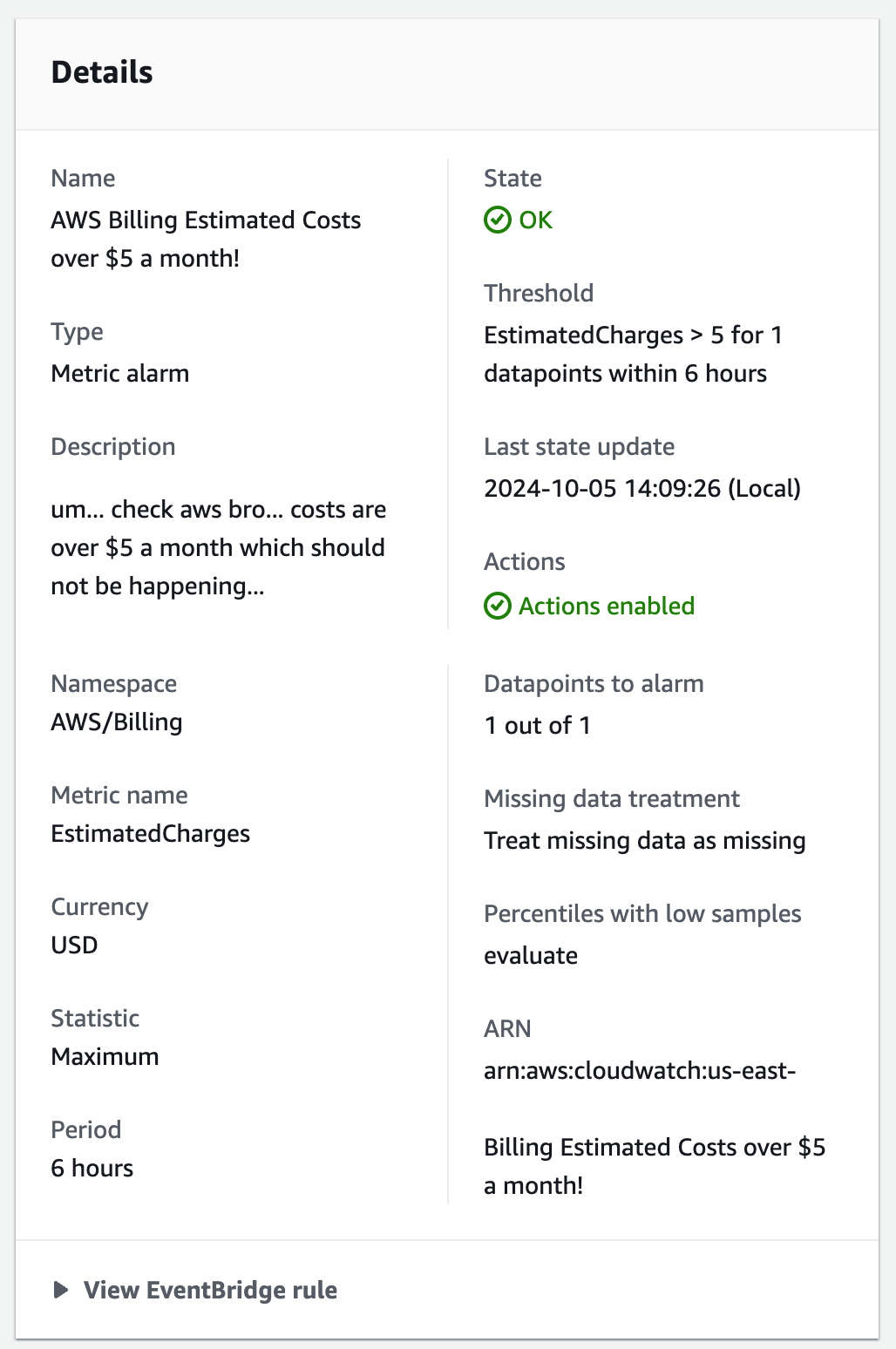
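In my flow, Node-RED does the fetching. Outside Node-RED, the same check could be sketched like this: fetch each URL, follow redirects, and look for the expected text in the body. This assumes Node 18+ for the global `fetch`. `runCanaries` is a hypothetical name, and prefixing scheme-less entries with http:// is my assumption about how they should be treated.

```javascript
// Canary sketch: fetch each URL and check the body for an expected string.
// fetchImpl defaults to the global fetch (Node 18+); pass a fake in tests.
async function runCanaries(urls, fetchImpl = globalThis.fetch) {
  const results = [];
  for (const { url, find } of urls) {
    // Entries like 'shanebodimer.com' have no scheme; assume http://.
    const target = /^https?:\/\//i.test(url) ? url : `http://${url}`;
    try {
      const res = await fetchImpl(target, { redirect: "follow" });
      const body = await res.text();
      results.push({ url, ok: body.includes(find), status: res.status });
    } catch (err) {
      // DNS failure, TLS error, timeout, etc. all count as a failed canary.
      results.push({ url, ok: false, error: String(err) });
    }
  }
  return results;
}

// Example: only the failures need a notification.
// runCanaries(urls).then((r) => console.log(r.filter((x) => !x.ok)));
```

Matching on text instead of only the status code also catches cases where something answers with a 200 but isn't actually my page.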

Bonus: Cost Alerts

The whole reason I did this was to save money and make sure I don't get charged too crazy. To further ease my mind, I set up billing alerts to send me an email whenever my estimated AWS monthly costs are above $5. This is what the config looks like. I just copied it from the AWS documentation: https://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/monitor_estimated_charges_with_cloudwatch.html#creating_billing_alarm_with_wizard
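Here is the same kind of alarm expressed as the parameters for CloudWatch's PutMetricAlarm call, for example via `PutMetricAlarmCommand` in the AWS SDK for JavaScript v3 (`@aws-sdk/client-cloudwatch`). This is a sketch only. The alarm name, SNS topic ARN, and 6-hour period are placeholders and assumptions. Billing metrics live in us-east-1, so the client would need that region.

```javascript
// Parameters for CloudWatch PutMetricAlarm: alert when estimated charges exceed a threshold.
// Billing metrics are only published in us-east-1.
function billingAlarmParams(thresholdUsd, snsTopicArn) {
  return {
    AlarmName: `estimated-charges-over-${thresholdUsd}-usd`, // hypothetical name
    Namespace: "AWS/Billing",
    MetricName: "EstimatedCharges",
    Dimensions: [{ Name: "Currency", Value: "USD" }],
    Statistic: "Maximum",
    Period: 21600,               // 6 hours (assumed; billing data only updates a few times a day)
    EvaluationPeriods: 1,
    Threshold: thresholdUsd,
    ComparisonOperator: "GreaterThanThreshold",
    AlarmActions: [snsTopicArn], // SNS topic that sends the email
  };
}

// Hypothetical topic ARN for illustration.
const params = billingAlarmParams(5, "arn:aws:sns:us-east-1:111122223333:billing-alerts");
console.log(JSON.stringify(params, null, 2));

// With the SDK installed, something like:
// const { CloudWatchClient, PutMetricAlarmCommand } = require("@aws-sdk/client-cloudwatch");
// await new CloudWatchClient({ region: "us-east-1" }).send(new PutMetricAlarmCommand(params));
```

Billing alerts also have to be turned on in the account's Billing preferences before the EstimatedCharges metric shows up.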